Love Letter Strategy: The replication crisis comes for board game analysis

If you came here looking for real world advice, I think it's all about swiping these days

Also in this series: Monte Carlo simulations of Mexican Train, Crazy Eights/Uno, and Resistance/Avalon

Update: The author of one of the papers I cite responded, see the Appendix.

Love Letter is the board game connoisseurs' Go Fish, an elegant game from a more civilized age. You guess cards sometimes, and every card has a unique effect when played; it’s portable, fast-paced, and allows for some basic deduction that pairs well with any level of BAC1.

The rules can be found here, but as a recap, there are 8 types of card, with ranks 1-8.

Guard(5), which allows you to eliminate a player if you correctly guess their card (guards are not a legal guess)

Priest(2), which allows you to see the card of another player

Baron(2), which allows you to secretly compare hands with another player, eliminating the player with the lower rank, doing nothing on a tie

Handmaid(2), which prevents you from being targeted until your next turn

Prince(2), which allows you to force any player to discard their card and redraw if not eliminated

King(1), which allows you to swap hands with another player

Countess(1), which has no effect, but must be discarded if your other card is a Prince or King

Princess(1), which loses the game if discarded.

Every turn you draw a card, pick a card to play from the two in your hand, and a target if required. Play ends when all but one player is eliminated, or when there are less than two cards undrawn, in which case cards are revealed and the highest remaining card wins. (in two player games, three cards are revealed face up at the start of the game)

Past Analysis

Before I reveal what my simulations show about the strategy of this game, let’s see what everyone else thinks.

The top Youtube Video on Love Letter Strategy offers card-specific advice including trying to guess cards with two copies remaining, preferentially playing priests, and holding handmaids back to protect higher cards.

The top Reddit post offers a similar strategy table, which can be summarized as always trying to play your lower card, but play a priest over a guard.

Fact-check: Mostly True. Outside of specific scenarios, my Monte Carlo simulations back up almost every piece of advice, except they show an edge for playing handmaids ahead of some lower cards. The video/post also doesn’t cover more advanced strategies like weighting Princess guesses late in the game, or making your plays more context-dependent.

A 33-page bachelor’s thesis on Love Letter Strategy trained a dynamic programming agent on Love Letter, and makes the following claim: “Because this strategy always makes the decision that gives the player using this strategy the highest probability of winning, this strategy can be considered an optimal strategy.”

Fact-check: Mostly False. This paper and implementation are excellent (I wish I had read it first, I reinvented many of the same concepts through trial and error), but the dynamic programming agent they created has two weaknesses that keep it from being truly optimal: 1) It learns nothing from its opponents play (even without making assumptions about strategy, play history helps with Princess guesses), and 2) It makes exploitable deterministic moves (e.g. it guesses a Baron when it has a low card, and a Priest with a high card).

Also of interest: their AI endorses playing your lower card on your initial move, with the exception of Priests over Guards, and Handmaids over Priests, which matches my results. (Update: the author of this paper has responded and is agreed that the approach is not truly optimal, see the Appendix)

A 7 page paper on Love Letter compared more advanced AIs to an AI which uses a few basic rules: 1) guessing the opponent’s card when it knows it, 2) preferentially playing lower cards, and 3) guessing the most common card remaining. Comparing this basic approach to more advanced AIs the paper concludes: “its playing strength does not significantly outperform the [rule] based agent. The experiment shows that the game is stochastic and even simple deterministic approaches like [rule] based can win in half of simulations.”

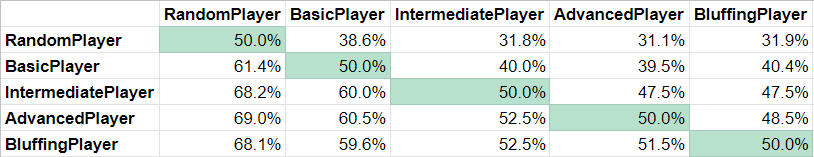

Fact-check: True. The game is highly random (even a player doing random legal plays can win 30% of the time), and optimizations past the ones described above only matter in around 6% of games from my testing.

Strategy Overview

Let’s break the hierarchy of the game’s strategy down in oversimplified meme form.

Below are the same strategies described in slightly more depth, as I implemented them for the AI players, where each strategy expands on the one above. I describe these strategies in more depth in the Appendix.

The Random Player always picks a random legal move, considering Princess discards to be illegal. (the app version of the game typically does not allow them)

The Basic Player takes a win with a Guard/Baron/Prince if it knows it can win immediately, and makes Guard guesses out of the pool of not-revealed cards

The Intermediate Player tries to get rid of their card if it’s guessable and known, plays Barons according to the odds of the remaining cards, and otherwise plays lower cards, with exceptions for Priests and Handmaids.

The Advanced Player increases the likelihood of the other player holding a Princess or King with how late in the game it is (see details below). It also assumes its opponent is not holding all copies of a card it guesses with a Guard, and uses that information to narrow in its guess.

The Bluffing Player deliberately exploits the predictability of the AdvancedPlayer, attempts to duplicate its guessing algorithm, and if it thinks the AdvancedPlayer would guess its card, tries to throw it off the scent by guessing that card themselves. It also derives conclusions about what card the other player has based on their plays, by assuming they are preferentially discarding lower cards.

Now, let's pit them all against each other! The table below shows the odds of the strategy in the row (left), beating the strategy in the column (top) in a single round of the game, based on a Monte Carlo simulation that pits each strategy against each other with a randomized first player over 5 million iterations:

And for completeness’ sake, the performance of the same AIs in a first-to-seven game set, which is how the game is normally scored for two players, which increases the margins:

Full disclosure: I do not consider my most advanced AI to be purely optimal in the sense of hitting a Nash equilibrium where its strategy cannot be exploited; I’ve A-B tested a number of optimizations to make some points about the game’s strategy space, but I have not fully explored the space. Based on my experiments, I suspect a true optimum would involve regressing to find a random/mixed strategy, rather than calculating a single optimal move for each game state which could be exploited to reveal information.

BasicPlayer: Good enough for prod

There’s an official LoveLetter app which supports online play, and comes with a basic AI for single-player games. That AI appears to be extremely similar to my BasicPlayer implementation: it plays cards semi-randomly, avoids obvious mistakes, takes obvious wins (although it doesn’t exploit Baron information optimally) and is generally exploitable by a player following simple rules like playing lower cards.

I saw some early reviews on the app complaining about the AI winning too much; there are no difficulty selectors on the app, but I would guess they wanted to build something players could beat, and making the AI too advanced makes it difficult to have a meaningful edge.

AdvancedPlayer: The Princess and the Monty Hall Problem

Pop Quiz: You’re near the end of a two-player game of LoveLetter, with two cards left undrawn. Over the course of the game your opponent has played 4 cards. Your opponent could have a Princess, a Priest, or a Prince. You’ve never seen their card, and no one has been forced to discard. What are the odds they’re holding the Princess?

Answer: 5/7ths. Intuition would say 1/3rd, since your opponent can only be holding one of three cards, but intuition is a liar. At this stage in the game, 5 cards have passed through your opponent’s hand. If any of them had been the Princess, they would have been forced to keep it and play their other card. Those five cards plus the two cards no one has drawn adds up to 7 possible places the Princess could have been dealt; and if it had been dealt into any of the 5 cards your opponent has encountered, they would be holding it right now.2

The AdvancedPlayer formalizes this calculation and takes it into account when guessing its opponent’s card. It uses the following formula:

OpponentCards = The number of times your opponent has drawn without being forced to discard or revealing their card +1

RemainingCards = The number of cards not yet drawn by either player

Odds of holding a princess = (OpponentCards)/(OpponentCards+RemainingCards)

In the example above, plug in the values 4 and 2, and you get odds of 5/7ths.

The AdvancedPlayer applies the same logic to Kings, as the card is particularly dangerous to play as it exposes your hand and lets your opponent react first. However, this does cause the AdvancedPlayer to incorrectly weight its guesses for players that pick cards randomly. With knowledge of your opponent’s strategy, you could derive similar weighting algorithms about the likelihood of your opponent holding other cards, based on the potential cards that could have passed through their hands.

BluffingPlayer: Bluffing is overrated

For every optimization so far, I A-B tested them to confirm that they deliver an edge relative to the other strategies; where it starts to get interesting is that the BluffingPlayer does worse against weaker AIs than the AdvancedPlayer does (because it makes ineffective moves to protect itself and makes assumptions that aren't always valid), but beats the AdvancedPlayer due to knowing its strategy and optimizing around its play. This edge can be eliminated if the AdvancedPlayer adds randomness to its own strategy (such as by randomly assuming your opponent is bluffing with their guard guess half the time).

Overall, I’m inclined to think bluffing isn’t nearly as strong in LoveLetter as in some other games, given the high degree of randomness in the game. It took a bit of testing to find scenarios where bluffing wasn’t simply handicapping yourself by making a less effective move, and in cases where it was effective, it depended upon knowing your opponent was playing a certain way.

Conclusion

That was a lot of analysis! I suspect some of these strategies hold up less well for more than 2 players (less public information, and the incentives are different, knocking an opponent out with a Baron risks giving away information about your own hand), and the algorithms have room for improvement on finding the right mix on bluffing and drawing conclusions on your opponent's play.

And while bluffing may be overrated as a tactic, bluffing is also where all the fun is! If you’re playing the game to win, you can be a boring robot like the ones I’ve created, but in person, I highly endorse trash-talking, bluffing, and going twelve levels deep in trying to outguess your opponent in a battle of wits that’s still mostly luck.

Oh, and if anyone wants to join a LoveLetter AI Symposium, hit me up.

Appendix: In-Depth strategies

Each AI extends the strategy of the last one, with some tweaks/refinements (e.g. the more specific rules replace the random rules, but the rules are applied in hierarchy order: immediate guaranteed wins are taken before defensive plays are checked)

Random Player:

Pick random legal moves, random targets for princes, random guard guesses, while considering Princess plays to be illegal.

Basic Player:

If you know the other player’s card, guess it with a Guard

If you know the other player has a princess, eliminate them with a prince if you can

If you know you can beat the worst case card the other player has, knock them out. (comparably, if you know you would lose for sure on a Baron play, play your other card)

When guessing with a Guard without certainty, guess randomly out of the remaining cards that are not public knowledge

Never play a prince on yourself when you have the princess

Intermediate Player:

Play a Baron if your card is higher than the median value they can have (not fully optimized)

If they know your card and it wouldn’t put you in a bad spot with respect to playing a King or Baron, ditch it.

Preferentially play lower cards over higher cards, with the exceptions of playing Handmaids whenever you have them unless it’s your final play, and playing priests over guards.

Target your opponent with Princes over yourself

Don’t guess your own card as it lowers your odds of getting a guard guess. After that, preferentially guess from the cards where there are two remaining if you can

Advanced Player:

Weight the odds of the cards you guess with a Guard by how late in the game it is, for Princesses and Kings (via the formula described above)

If your opponent just guessed a card, assume there’s at least one copy of it not in their hand

Bluffing Player:

If you would expect the AdvancedPlayer to guess your card on their next turn and there are guards remaining, guess your own card to throw them off the scent. (this strategy is neutralized by randomly ignoring your opponent’s last Guard guess 50% of the time, which forces the bluffing player to handicap themselves for no benefit)

If your opponent is discarding a high-value card such as the Prince or Countess without having their card known, assume they were forced to in the case of the Countess, or are holding a higher card that would necessitate that decision. (this also handicaps the BluffingPlayer if the opponent doesn’t consistently favor lower cards)

Regarding the 33-page bachelor’s thesis mentioned above, more detail on its exploitability:

It learns nothing from its opponent’s play. Their AI only considers the current state of the game and remaining cards, it does not take into account information that gained from an opponent’s guesses or card play history. My simulations show that an AI that uses this information can outperform one that does not, even without making assumptions about their opponent’s strategy.

For example, when you're about to make a guess late in the game, if your opponent has recently been forced to discard their card, versus if they have cycled through several cards without a discard, they have different odds of holding a Princess, per the formula discussed above.It never bluffs, and makes exploitable deterministic moves. The AI does not take into account the risks from leaking information about its own state, it always attempts the same “optimal” move as if its opponent is ignoring its moves, which would allow its opponent to derive information about its cards.

For example, on its first move playing a guard their AI will guess a Baron if it has a low non-Baron card, and guess a Priest if it has a Prince or higher; an opponent with full knowledge of this strategy can learn information about the AIs card.

Update: A response from Jarno Huibers, the author of the original paper:

Hello Mark Newheiser,

I am indeed the author of that paper which I wrote as my bachelor's thesis. I have read your article and thought it was an interesting and nice read.

I understand what you are saying about the flaws of the dynamic programming agent, and I agree. The agent tries to make an optimal move when looking at the current gamestate in a vacuum. It does not consider its opponents strategy or giving away its own. It considers what move should be made if you were randomly thrown into the middle of a game of Love Letter, with only information about the current game.

However, even so I found your comments about the Monty Hall problem very interesting because this is something I hadn't considered which is also part of the gamestate and could be taken into account by a dynamic programming agent. Doing so could improve the strategy, meaning it is indeed not optimal. I do think I used the term 'optimal' a bit loosely here since I knew the strategy didn't consider past games. Maybe I meant it more as the optimal strategy out of the used strategies for my project.

Thank you for reading my paper and I wish you good luck with any of your future endeavors.

Sincerely,

Jarno Huibers

Braininess At Cards

The proof for the Monty Hall Problem-style “goats” formulation is left as an exercise for the reader. You can read up on the problem, but if you’re skeptical, try it out in some games and observe the results for yourself. https://en.wikipedia.org/wiki/Monty_Hall_problem